Generative AI (GenAI) and large language models (LLMs) are revolutionizing software development by automating coding tasks from debugging to workflow optimization. This ongoing revolution is shifting practices from traditional model-driven development to AI-powered tools like GitHub Copilot or Codeium. While these tools promise efficiency, understanding the strengths and limitations of GenAI is essential to make sure it is used effectively and responsibly.

Responsible AI addresses, among other things, ethical, legal, and transparency concerns in code generation. Explainability aims to clarify the black-box nature of these models. There are also various robustness challenges, such as prompt injection, the generation of vulnerable code, and dependency hallucinations, to name a few.

Developers should integrate GenAI responsibly into software development by addressing these risks, adopting rigorous assessment, and promoting secure coding practices.

In this webinar you will learn:

Nyttig informasjon:

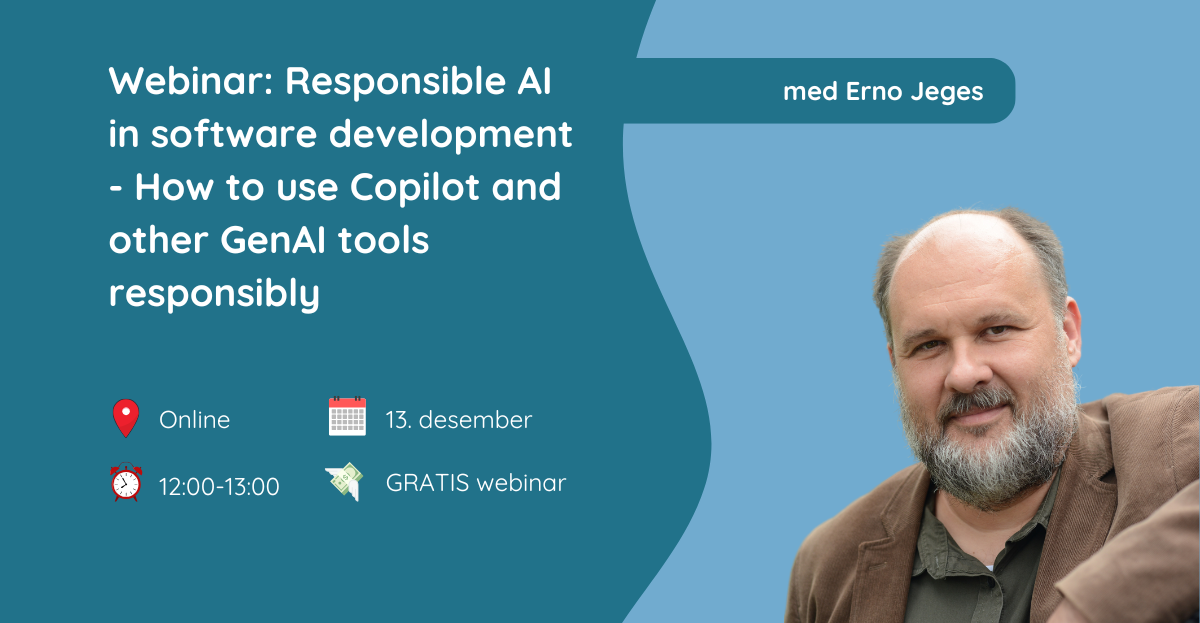

📅 Dato: 13. desember 2025

🕛 Klokken: 12.00-13.00

💸 Pris: GRATIS

📢 Språk: Engelsk

Er du klar for å bli med?

Responsible AI in software development

The rise of GenAI in software development

Robustness of the generated code

Learning responsible coding with GenAI

Presenters: Erno Jeges / Balazs Kiss

Erno has been a software developer for 40 years, half of which he has spent writing code and half breaking it. For the last ten years he has focused on teaching developers how not to code. His track record includes more than 100 classes in 30 countries around the world.

Balazs started in software security 15 years ago as a researcher, taking part in over 25 commercial security evaluations. To date, he has held over 100 secure coding training courses all over the world on typical code vulnerabilities, protection techniques, and best practices. His most recent passion is the (ab)use of AI systems, the security of machine learning, and the effect of generative AI on code security.
